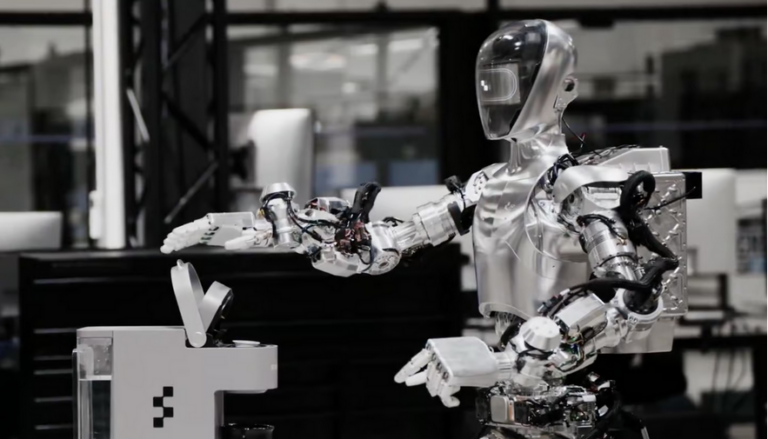

Figure’s Brett Adcock claimed a “ChatGPT moment” for humanoid robotics on the weekend. Now we know what he meant: the robot can watch humans perform tasks, build its own understanding of how to do them, and then carry them out entirely autonomously.

General-purpose humanoid robots will need to handle all sorts of jobs. They’ll need to understand the tools, devices, objects, techniques and objectives we humans use to get things done, and they’ll need to be as flexible and adaptable as we are across an enormous range of dynamic working environments.

They won’t be useful if they need a team of programmers to tell them how to do every new job; they need to be able to watch and learn. Multimodal AIs that can watch and interpret video, then drive robots to replicate what they see, have made revolutionary strides in recent months, as evidenced by Toyota’s incredible “large behavior model” demonstration in September.

Continue here: New Atlas