Instagram is introducing “built-in protection” for teenagers, updating its rules in what it says is an effort to give underage users the highest possible level of safety and to reassure parents.

The new “teen accounts” will launch on Tuesday in the UK, US, Canada, and Australia. Meta, Instagram’s parent company, describes them as a “new experience for teens, guided by parents” and promises that they will better support parents and reassure them that their teens are safe, with the proper protective measures in place.

The new settings come amid mounting pressure and criticism directed at social media companies to make their platforms safer for teenagers.

New Features

Teen accounts will change how Instagram works for users aged 13 to 15, with a number of protective settings switched on by default.

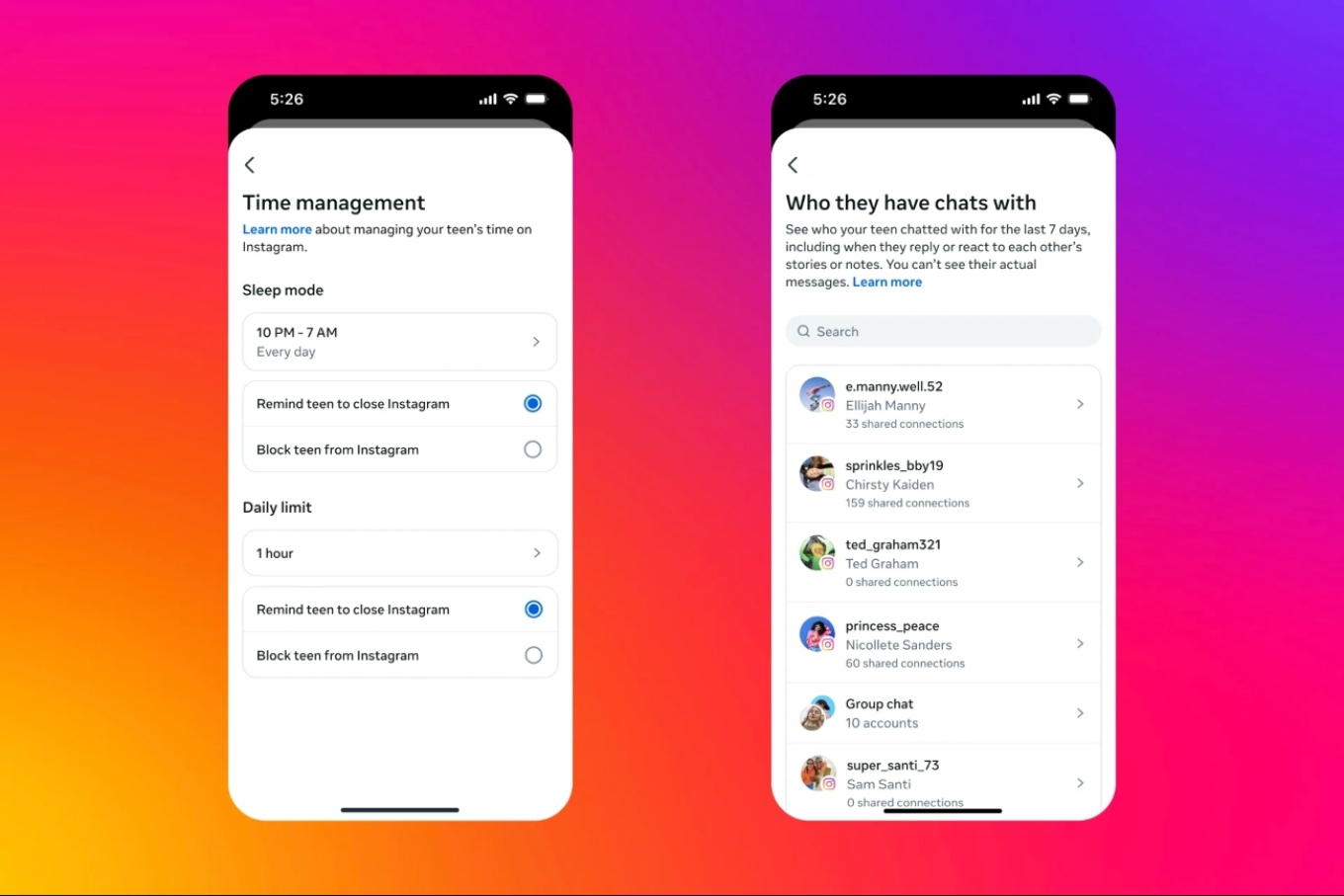

These accounts will apply strict controls on sensitive content to prevent potentially harmful material from being recommended, and notifications will be muted overnight.

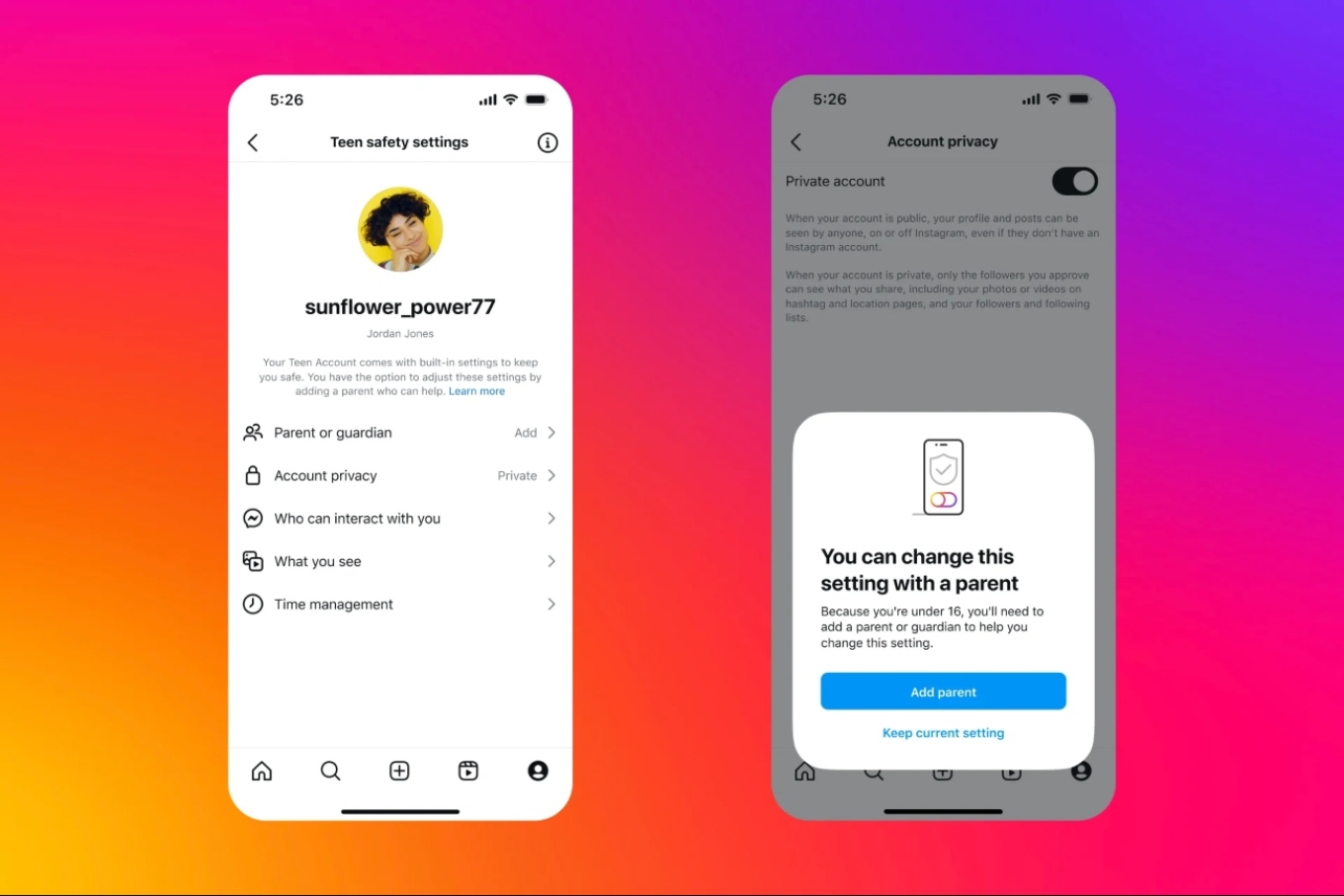

Accounts will also be private by default: teens will need to actively accept new followers, and their content won’t be visible to people who don’t follow them.

Default settings can only be changed by adding a parent or guardian to the account. Parents who choose to supervise their child’s account will be able to see who the child is messaging and which interests the child has declared, though they won’t be able to read the messages themselves.

Instagram said it will begin moving millions of existing teen users to the new experience within 60 days of notifying them of the changes.

Age Verification

The system relies primarily on users being honest about their age, although Instagram already has tools intended to verify a user’s age when it suspects they are lying about it.

Starting in January in the US, Instagram will also begin using artificial intelligence (AI) tools to proactively identify teens using adult accounts and move them to a teen account.

Exposure to Harmful Content Despite Measures

Instagram isn’t the first platform to offer such tools for parents, and it says it already has more than 50 tools aimed at keeping teens safe.

In 2022, it launched a Family Center and parental supervision tools that let parents see, among other things, who their child follows and who follows them.

Snapchat has a similar Family Center feature, which allows parents over the age of 25 to see who their child is messaging and to limit the child’s exposure to certain content.

Instagram also uses age verification technology to check the age of teens who try to change their stated age to over 18, asking them to confirm it with a video selfie.

Even so, questions remain about why teens are still being exposed to harmful content, particularly as studies have shown that children have encountered violent material in spite of such precautions.

Concerns About the Effectiveness of Tools

Instagram’s latest tools put more control in parents’ hands, and with it more responsibility: parents must decide whether to give their child more freedom or to monitor their activity and interactions more closely.

However, to exercise this control, parents need their own Instagram account. Even then, they can’t control the algorithms that push content toward their children, or what billions of users around the world choose to share.

In April, the UK media regulator Ofcom also raised concerns about how willing parents are to step in to keep their children safe online. One of its findings was that even when such controls are available, many parents don’t use them.
