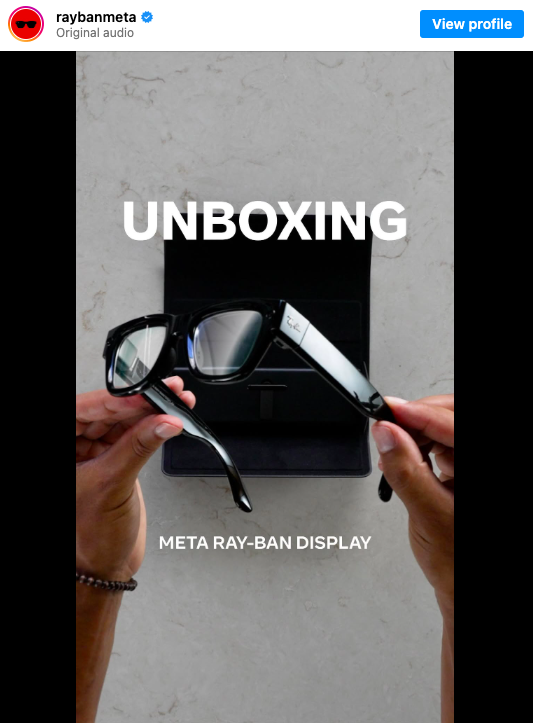

Meta Platforms Inc. on Wednesday, Sept. 17, took its most ambitious step to date in the smart glasses category, unveiling its first model with an integrated display as part of a strategy to make the product an essential part of everyday life.

The new model, the $799 Meta Ray-Ban Display, has a screen built into the right lens that shows text messages, video calls, map directions and responses from Meta's artificial intelligence assistant. The discreet screen can also serve as a camera viewfinder or as a control for music playback.

At the Meta Connect event in Menlo Park, California, CEO Mark Zuckerberg described the glasses as a path toward "superintelligence," his term both for advanced artificial intelligence and for the company research team dedicated to building it. "Artificial intelligence must serve people and not be confined to data centers that automate parts of society," he said, setting out the framework for the company's future ambitions.

Meta stressed the importance of the display, which it sees as the key element that will let users gradually shift core functions from their smartphones to their glasses. Chief Technology Officer Andrew Bosworth called the device the "first serious product" in the space and said its release marks a crucial step toward building a comprehensive consumer electronics ecosystem. With it, Meta aims to compete with companies such as Apple and Google, continuing a strategy it began in 2016 with its first virtual reality headsets.

"This device may allow you to keep your phone in your pocket more often during the day," Bosworth said, explaining that the phone will not disappear but that the glasses make essential functions easier to reach.

The new glasses also introduce a new control system. In addition to swiping on the frame, users can issue commands through hand gestures detected by the Meta Neural Band wristband. Finger pinches, thumb swipes, double taps and hand rotations let users summon the AI voice assistant, adjust music volume or control apps.

The glasses also offer live captions with real-time translation, video calls that let the other party see what the wearer sees, and spoken replies to messages. Meta says support for mid-air handwriting and for filters that reduce background sound, for clearer conversations, will follow.

The glasses go on sale September 30, bundled with the Neural Band, in two sizes and two colors. They will be sold through partners including Ray-Ban, LensCrafters, Best Buy and Verizon. At launch they will support apps such as Messenger, WhatsApp and Spotify, while Instagram support will start with messaging and gradually expand to Reels.

The display has a 20-degree field of view, a 600×600-pixel resolution and brightness of up to 5,000 nits, and the glasses can be fitted with prescription lenses. The 12-megapixel camera records 1080p video. The battery lasts six hours, and the charging case provides an additional 30 hours. The Meta Neural Band offers 18 hours of battery life and comes in three sizes.

The company has invested billions of dollars in the effort, including $3.5 billion for a roughly 3% stake in eyewear maker EssilorLuxottica. Bosworth stressed that the glasses are an investment expected to pay off, with Meta anticipating profits from both hardware and services.

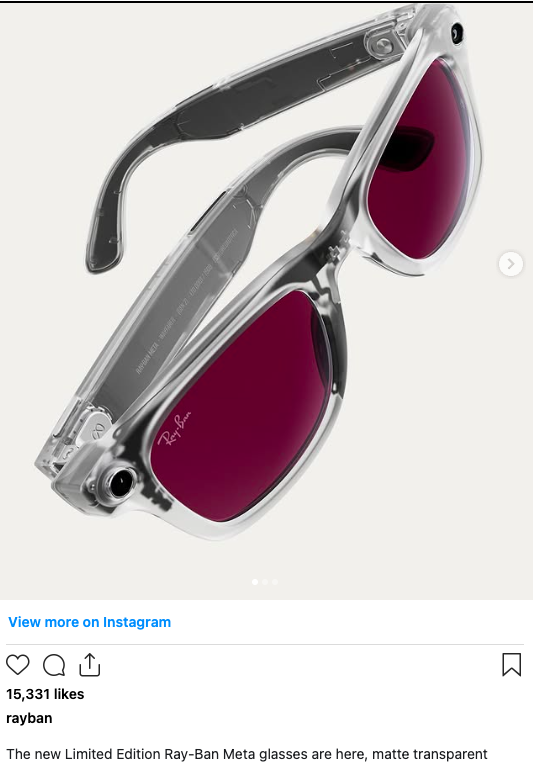

Meta also introduced new models without displays. Updated Ray-Ban glasses, starting at $379, record 3K video and offer 40% longer battery life. The sports-oriented Oakley Vanguard glasses feature enhanced speakers, slow-motion and time-lapse recording, and possible future walkie-talkie functionality.

Meta sees these glasses as an intermediate step toward full augmented reality (AR), with a consumer-ready AR model expected in 2027. Competition is intensifying, as Apple, Samsung and Chinese companies such as Xreal prepare similar devices. Bosworth expects sales of more than 100,000 units by the end of next year, arguing that Meta has caught the spirit of the times amid the AI boom.

Alex Himel, who leads the project, said AI glasses will achieve "mainstream appeal" by the end of the decade. The company is already exploring future models with cellular connectivity and dual displays, as well as the possibility of a dedicated app store.
