A few days ago, at Google’s annual I/O developer conference, the search giant revealed a new AI system called Duplex. The system works with Google Assistant and can carry out simple conversational tasks over the phone with businesses, such as scheduling a hair salon appointment or making a restaurant reservation. Not everyone was happy with the groundbreaking presentation, though. A subsequent outcry over the ethics of an AI voice tricking people into thinking it was human has prompted Google to say the product will be programmed to disclose that it is a computer in all future uses.

The big hallmark of Google Duplex is the system’s ability to conduct natural-sounding conversations. The system is programmed to respond quickly and to incorporate what Google calls “speech disfluencies” to sound more natural. These include subtly calibrated “hmm”s and “uh”s that make it sound like a real person rather than the rigid, mechanical computer voices we are used to.

The demonstration of the technology at the conference was both impressive and startling. The first example showed Duplex calling a hair salon and scheduling an appointment (about 1 minute into the video above). The second example involved an even more complex conversation, with the system calling a restaurant to try to make a reservation. During the call, the system is told it won’t need a reservation for that number of people on that particular day. Understanding this, Duplex thanks the person on the other end and hangs up.

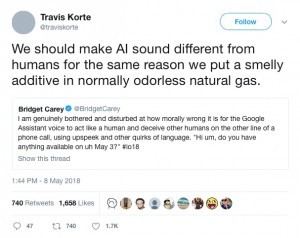

These examples of a human-sounding AI interacting with a real person are undeniably impressive, but the technology’s ability to so blatantly fool people into thinking they are talking to another human has left many unnerved. From suggestions that Google had failed at ethical and creative AI design to more explicit accusations that the company was ethically lost, rudderless and outright deceptive, it seemed something had gone drastically wrong.

Had Google entirely miscalculated how its new technology would be perceived? Was this a case of a Silicon Valley company developing a technology in a complete vacuum without realizing the real-world implications of its product?

In a blog post published alongside the presentation, Yaniv Leviathan, Principal Engineer, and Yossi Matias, Vice President of Engineering, seem simply excited about the potential of their technology, presenting it as a response to the frustration of “having to talk to stilted computerized voices that don’t understand natural language.”

Little thought seems to have gone into the real-world repercussions of the technology, and neither the blog post nor the conference presentation mentions any requirement that the system disclose its artificial identity. The only reference to transparency in the lengthy blog post is: “It’s important to us that users and businesses have a good experience with this service, and transparency is a key part of that. We want to be clear about the intent of the call so businesses understand the context. We’ll be experimenting with the right approach over the coming months.”

Since the presentation, debate has raged over whether creating an AI system that can accurately mimic humans should even be a goal. Erik Brynjolfsson, an MIT professor, told the Washington Post: “Instead, AI researchers should make it as easy as possible for humans to tell whether they are interacting with another human or with a machine.”

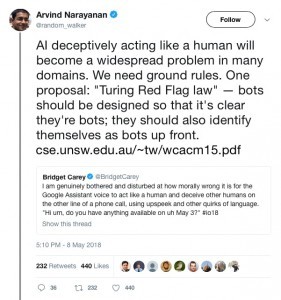

Arvind Narayanan of Princeton University suggested: “We need ground rules. One proposal: ‘Turing Red Flag law’. Bots should be designed so that it’s clear they’re bots; they should also identify themselves as bots up front.”

On this issue, Google seems to have catapulted mainstream discourse into territory many were not ready to enter. Beyond the obvious concern that the technology could be misused for telemarketing and robocalls, one ethical question became paramount: does AI need to identify itself when communicating with a human?

In response to the growing controversy, Google released a statement saying disclosure will be built into the software.

“We understand and value the discussion around Google Duplex – as we’ve said from the beginning, transparency in the technology is important,” a Google spokeswoman told CNET. “We are designing this feature with disclosure built-in, and we’ll make sure the system is appropriately identified. What we showed at I/O was an early technology demo, and we look forward to incorporating feedback as we develop this into a product.”

While the technology itself is fascinating, perhaps the more interesting revelation of the past few days has been the vociferous public conversation. Until now, natural voice interaction with computers was a far-off sci-fi concept, experienced only in films such as 2001: A Space Odyssey, where people converse with AI systems like HAL. This week, for the first time, we have had to grapple with the reality of this development. Computers won’t sound as robotic and stilted as Siri forever. Google Duplex has offered us a glimpse of the near future: it’s exciting, confronting and a little creepy.

Source: newatlas
