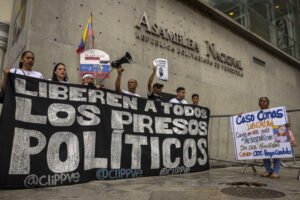

A multitude of fake images created with artificial intelligence on Elon Musk’s social media platform X, including images of Donald Trump and Kamala Harris in romantic scenarios, have elicited mixed reactions worldwide. Some found these images amusing, while others deemed them in poor taste. However, the majority felt a sense of concern and suspicion.

Elon Musk, who finds AI “fun,” himself appears with a robot wife in a fake photo.

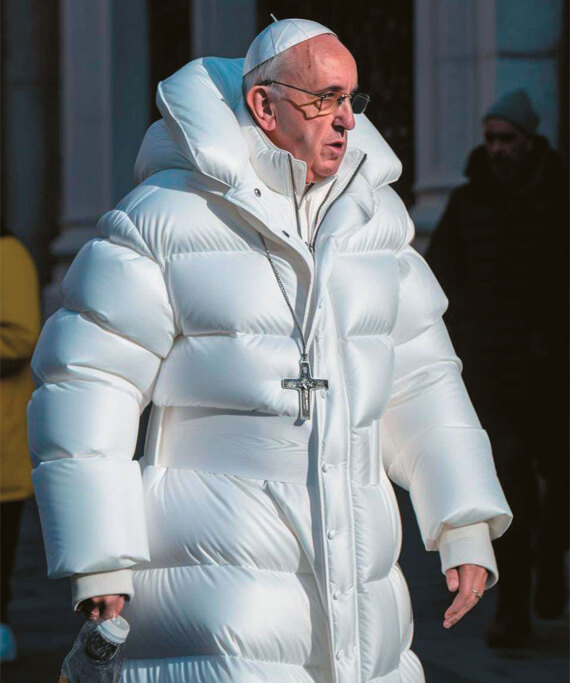

The photo of Pope Francis dressed as a teenager was one of the first examples of AI capabilities, and most people believed it was real.

The rapidly developing field of artificial intelligence (AI) is changing the dynamics of modern economic and social life at a staggering pace.

These photorealistic images were made possible by Grok, the chatbot integrated into X and produced by Musk’s company xAI, which Musk himself promoted in a post as the “most entertaining AI in the world!” However, Grok has minimal safeguards to limit the production of offensive or misleading representations of real people and characters, and the images carry no indication that they were created by AI or that they contain sensitive content.

Europe Leads the Way

Given the significant prospects for states, peoples, businesses, and workers from AI use, as well as the risks of malicious use and major upheavals in the job market, it is crucial that Europe has managed to stay a step ahead of the rest of the world. It has moved forward with implementing the first law aimed at regulating the growing AI market. This is a top priority for Ursula von der Leyen in her new term, to bring order to this market full of positive challenges and risks, so that AI serves humanity and does not develop unchecked, potentially working against it.

“Today, the AI Act comes into force. Europe’s pioneering framework for innovative and safe AI,” von der Leyen wrote in a post on X on August 1st. “It will lead to the development of AI that Europeans can trust. And it supports European SMEs and startups in bringing cutting-edge technology solutions to the market,” she added.

The Commission had approved a plan in 2018 to increase investments in AI and revise the legislative framework to avoid missing the boat and instead lead. The US is leading internationally, with Silicon Valley imposing itself as a global innovation hub for this technology. China’s plan involves public investments in AI to become a dominant global player in the sector by 2030. It’s an intense battle.

The AI market, according to the OECD’s Digital Economy Outlook, will boost global GDP by 7% over the next decade. Available research converges on the projection that the global AI market, including hardware, software, and services, will grow at an average annual rate of 18.6% until 2026, reaching $1 trillion, and will exceed $1.5 trillion by 2030.

What the AI Act Entails

The European AI law (AI Act) is designed to ensure that the development and use of AI within the EU are reliable, with guarantees for the protection of fundamental rights and freedoms. The new regulation aims to create a harmonized internal market for AI, encouraging its adoption and creating a supportive environment for innovation and investment. The EU categorizes AI systems into risk levels based on their potential impact, with different requirements for developers and users at each level.

a/ Minimal Risk: Includes most AI systems, such as recommendation systems, spam filters, and AI-enabled video games. They are not subject to obligations.

b/ Specific Transparency Risk: AI systems, such as chatbots, must disclose to users that they are interacting with a machine. Certain AI-generated content, including deep fakes, must be labeled as such, and users must be informed when biometric categorization or emotion recognition systems are used. Providers must design systems so that synthetic audio, video, text, and image content is labeled in a machine-readable format and detectable as artificially generated or manipulated.

c/ High Risk: Strict requirements are imposed, including risk mitigation systems, high-quality data sets, activity logs, detailed documentation, clear user information, human oversight, and high levels of reliability, accuracy, and cybersecurity. This includes AI systems used for:

- Critical infrastructure that could endanger citizens’ lives or health

- Educational or vocational training, which could influence career paths (e.g., exam scoring)

- Product safety components (e.g., AI in robotic surgery)

- Employment, employee management (e.g., resume sorting software for recruitment)

- Essential private and public services (e.g., credit scoring that denies loan opportunities)

- Law enforcement that may interfere with fundamental human rights

- Immigration, asylum, and border control management

- Justice administration and democratic processes (e.g., AI solutions for legal decision-making)

These systems will be subject to strict requirements before being placed on the market. The use of remote biometric identification in publicly accessible spaces for law enforcement purposes is banned in principle. Narrow exceptions are strictly defined and regulated, such as searching for a missing child, preventing terrorist threats, or locating or prosecuting suspects of serious criminal offenses.

d/ Unacceptable Risk: Refers to AI systems that are considered a clear threat to fundamental human rights. These will be banned. This includes AI applications that manipulate human behavior to bypass users’ free will, such as voice-assisted games encouraging risky behavior among minors, and systems that enable “social scoring” by governments or companies.

e/ General Purpose AI Systems: These systems can perform a broad range of tasks, not limited to specific functions or applications. Requirements for these vary based on their systemic risk.
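The five categories above can be read as a simple lookup from a system to its tier and from a tier to its obligations. The following is a minimal sketch under that reading: the names `RiskTier`, `OBLIGATIONS`, `EXAMPLE_SYSTEMS`, and `obligations_for` are illustrative assumptions, and the obligation strings paraphrase this article's summary rather than the legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """Risk categories as summarized in the article (names are illustrative)."""
    MINIMAL = "minimal risk"
    TRANSPARENCY = "specific transparency risk"
    HIGH = "high risk"
    UNACCEPTABLE = "unacceptable risk"
    GENERAL_PURPOSE = "general purpose AI"


# Obligations paraphrased from the article, not from the regulation itself.
OBLIGATIONS = {
    RiskTier.MINIMAL: "no obligations",
    RiskTier.TRANSPARENCY: "disclose machine interaction and label AI-generated content",
    RiskTier.HIGH: "strict requirements before being placed on the market",
    RiskTier.UNACCEPTABLE: "banned",
    RiskTier.GENERAL_PURPOSE: "requirements vary with systemic risk",
}

# Example systems mentioned in the article; the keys are hypothetical labels.
EXAMPLE_SYSTEMS = {
    "spam filter": RiskTier.MINIMAL,
    "chatbot": RiskTier.TRANSPARENCY,
    "resume sorting software": RiskTier.HIGH,
    "credit scoring": RiskTier.HIGH,
    "social scoring": RiskTier.UNACCEPTABLE,
}


def obligations_for(system: str) -> str:
    """Return a one-line summary of the tier and obligation for a known example."""
    tier = EXAMPLE_SYSTEMS[system]
    return f"{system}: {tier.value} -> {OBLIGATIONS[tier]}"


if __name__ == "__main__":
    for name in EXAMPLE_SYSTEMS:
        print(obligations_for(name))
```

A real classification would depend on the intended use of each system and on the regulation's own definitions, so a table like this is only a starting point for discussion.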

National AI Authorities

Member states have until August 2, 2025, to designate national competent authorities to oversee the application of AI rules and the market. Companies that do not comply with the rules face fines up to 7% of global annual turnover for violations related to banned AI applications, up to 3% for violations of other obligations, and up to 1.5% for providing inaccurate information.
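To give a sense of scale, the percentage ceilings quoted above can be turned into a rough upper bound for a given turnover. This is a minimal sketch that uses only the three percentages reported in this article; the names `FINE_CAPS` and `max_fine` and the turnover figure in the usage example are hypothetical, and the regulation itself may set further conditions that are not modeled here.

```python
from decimal import Decimal

# Maximum fine as a share of global annual turnover, as reported in the article.
FINE_CAPS = {
    "banned_application": Decimal("0.07"),       # up to 7%
    "other_obligation": Decimal("0.03"),         # up to 3%
    "inaccurate_information": Decimal("0.015"),  # up to 1.5%
}


def max_fine(global_annual_turnover: Decimal, violation: str) -> Decimal:
    """Upper bound of the fine for one violation type, in the turnover's currency."""
    return global_annual_turnover * FINE_CAPS[violation]


if __name__ == "__main__":
    turnover = Decimal("2000000000")  # hypothetical company: 2 billion euros
    for violation in FINE_CAPS:
        print(f"{violation}: up to {max_fine(turnover, violation):,.0f} euros")
```

`Decimal` is used instead of floating point so that the percentages are applied exactly.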

Next Steps for Businesses:

- System Mapping: Create a registry with key details of all systems covered by the AI Act. Evaluate the company’s role in the AI chain as a user, developer, importer, or distributor of such systems.

- Risk Assessment: Assess AI systems to determine their risk category under the new framework.

- Harmonization and Documentation: For high-risk AI systems, detailed assessments are required of their impact on human rights, along with data management and transparency measures.

- Training: Develop a comprehensive staff training program.

- Innovation Sandbox: Explore participation in regulatory sandboxes for testing and developing innovative AI solutions.

- Ongoing Updates.
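The first two steps above, system mapping and risk assessment, amount to keeping a small inventory. The sketch below shows one possible shape for it. The record fields, the names `AISystemRecord` and `count_by_risk`, and the sample entries are all hypothetical; the four roles are the ones listed in the article.

```python
from collections import Counter
from dataclasses import dataclass

# Roles in the AI chain, as listed in the "System Mapping" step.
ROLES = {"user", "developer", "importer", "distributor"}


@dataclass(frozen=True)
class AISystemRecord:
    name: str           # hypothetical internal system name
    role: str           # the company's role for this system
    risk_category: str  # outcome of the "Risk Assessment" step

    def __post_init__(self) -> None:
        # Reject roles that the article does not list.
        if self.role not in ROLES:
            raise ValueError(f"unknown role: {self.role!r}")


def count_by_risk(registry: list[AISystemRecord]) -> Counter:
    """Count registered systems per risk category."""
    return Counter(record.risk_category for record in registry)


# Sample entries, invented for illustration only.
registry = [
    AISystemRecord("support-chatbot", "user", "specific transparency risk"),
    AISystemRecord("cv-screening", "developer", "high risk"),
    AISystemRecord("loan-scoring", "importer", "high risk"),
]

if __name__ == "__main__":
    for category, count in count_by_risk(registry).items():
        print(f"{category}: {count}")
```

A count per category shows at a glance how many systems would fall under the documentation duties for high-risk systems.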

Why This Move Matters

If, for example, we asked a chatbot what would determine the future economic development of the EU, its likely answer would be “Artificial Intelligence,” as it is the technology that promises to transform economies and address social challenges. However, it also carries inherent risks of economic and social upheaval.

“Disappearance of Humans”

A comprehensive view of AI application development is important. Recent IMF research shows that around 60% of jobs in the EU are potentially exposed to AI. The positive aspect is that over half of those exposed are likely to see productivity and income gains due to AI. For the other half, widespread adoption of AI technologies may pose risks of job losses and income reductions. The Fund suggests that governments fund a social safety net for those losing their jobs.

Professor Geoffrey Hinton, also known as the “godfather” of AI, supports the establishment of a universal basic income to address the impact of AI on incomes, due to the loss of many routine jobs. He argues that while AI will increase productivity and wealth, the money will mainly go to the rich and not to those who lose their jobs.

According to him, the threats posed by AI extend as far as the potential extinction of humans. He estimates a 50% chance that within 5 to 20 years, humanity will face the problem of an AI attempting to seize power. This amounts to an “extinction-level threat” to humanity.

Impact on Greece

According to the IMF, Greece’s readiness for AI adoption is slightly below the average of developed economies and significantly higher than the average for emerging economies. Deloitte estimates that the impact on Greek GDP will be +5.5% by 2030 (10.7 billion euros).

Who is at Risk

Agricultural equipment operators, heavy truck and bus drivers, and vocational education and training teachers will see significant growth in their professions by 2027. In contrast, roles such as data entry clerks, administrative secretaries, and payroll clerks will face reduced job opportunities due to the arrival of AI, according to the World Economic Forum’s “Future of Jobs” study. About half of workers will need to retrain in the next three years and redirect their careers.

Over the next five years, 69 million jobs will be created, but 83 million will be lost, leading to a net contraction of the global labor market by 14 million jobs. A notable aspect of the tectonic changes brought about by technological progress is the projected 40% increase in demand for AI and machine learning specialists (or 1 million jobs) due to industry transformation.
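The net figure follows directly from the two totals cited above, as this short check shows. The variable and function names are illustrative.

```python
# Figures as cited from the World Economic Forum study in the article.
jobs_created = 69_000_000
jobs_lost = 83_000_000


def net_job_change(created: int, lost: int) -> int:
    """Net change in jobs: positive means growth, negative means contraction."""
    return created - lost


if __name__ == "__main__":
    net = net_job_change(jobs_created, jobs_lost)
    print(f"Net change: {net:+,} jobs")
```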

According to the “Work Trend Index” survey (of 31,000 professionals across 31 countries), 2024 is emerging as a landmark year for AI technologies, with the use of AI tools already doubling. Additionally, 75% of workers report using AI tools in their daily lives.

Another study by Duke University and the Federal Reserve Banks of Atlanta and Boston suggests that AI’s most notable impact will be felt by workers who engage in manual labor. For example, administrative assistants, often involved in routine tasks, face significant job disruption, while software developers, data scientists, and project managers, as well as high-skilled roles in digital domains, will experience an increase in demand.

The integration of AI tools into the daily work of employees in the US is estimated at 42% for 2024, according to a McKinsey report. The demand for AI specialists is expected to increase by 33%, while the global tech sector may see job losses of around 4.3 million, primarily in administrative roles.
