In the past, before the reign of the Internet, the universal worship of social media, and the democratization of all kinds of digital enhancements (filters, for instance), things were almost simple. At least one could generally distinguish between reality and fiction. In other words, one could tell whether a celebrity’s photo on the cover of a magazine reflected reality even slightly, or whether it was the result of meticulous editing and craftsmanship that would make the masters of the great Flemish school envious. And even if we couldn’t discern the differences, whether out of ostrich-like behavior, voluntary blindness, or simple inexperience, we had the solid certainty that the person depicted in a photograph was real, flesh and blood, standing before the camera, and captured with at least their tacit consent.

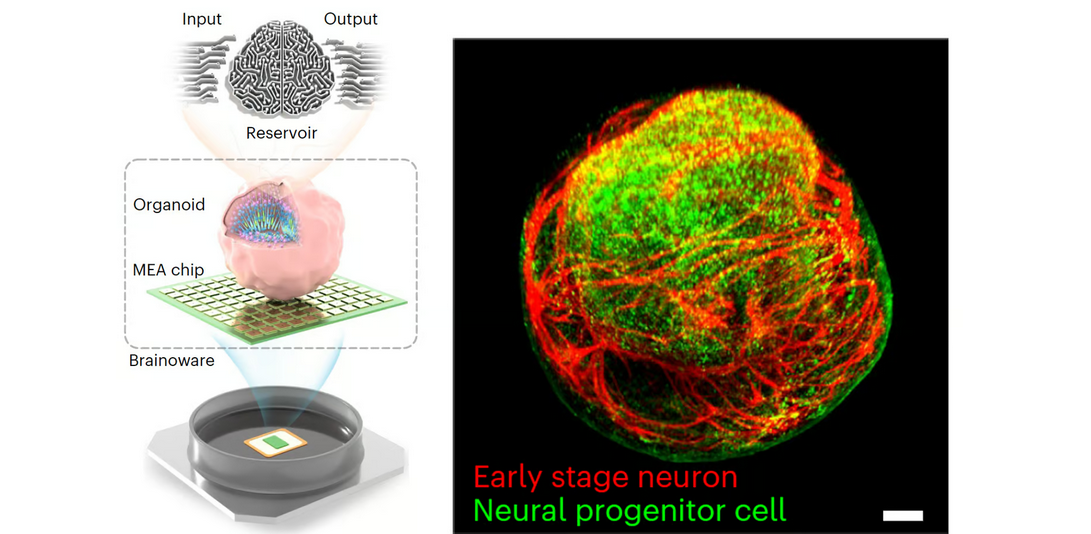

With Artificial Intelligence and its popularization through applications that fit in the palm of one’s hand on a mobile phone, such as the famous DaVinci (Leonardo is spinning in his grave), no one can be sure that what their eyes behold matches the real, tangible, analog truth. Through such apps, and without any training, knowledge, or Silicon Valley geek certification, we can now resemble a better, worse, or at any rate different version of ourselves, place ourselves against whatever background our heart desires, or join social media trends by dressing up as Brandon and Brenda from “Beverly Hills 90210.” But others can do exactly the same with our image, and without our permission or consent.

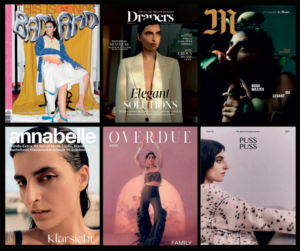

For Nasia Matsa, a model (who just a few weeks ago added another Louboutin campaign to her résumé) and journalist (with articles in major British media outlets), this realization came about in a rather harsh way. A few months ago, while waiting for the metro, she saw an advertisement for an insurance company featuring a woman who resembled her to an alarming degree. She photographed the ad and stared at the image for days but, as she described in an article for the online publication Dazed, she eventually deleted it, having almost convinced herself that no one had used her face for a campaign without her permission and that it was simply an extreme resemblance. A few months later, the story repeated itself.

And this time it was no laughing matter. Matsa came face to face with another poster from the same insurance company, in which she recognized the face of a colleague of hers, also a model. The result of the equation was frightening but obvious. The two models had fallen victim to the theft of their faces, which, through the use of Artificial Intelligence and minimal interventions, had been placed on other, algorithmically generated bodies and used for commercial purposes. “Our faces have been transformed into digital dolls to promote a project in which we never participated. In even worse news, I can’t prove or do anything about it, as the regulations for Artificial Intelligence are still a gray area. In the end, who does my face belong to?” Matsa rightfully wonders in her article.

And this is a question that will concern all of us in the near future. For now, it embarrasses and divides the fashion industry, the entertainment industry, and the celebrities who occasionally discover that they have fallen victim to deepfakes. The most recent example is Taylor Swift, whose manipulated nude photos flooded X (formerly Twitter) and became a trending topic (no comment on humanity) before the social media platforms reacted at a tortoise’s pace. To some, the example of the American singer may seem extreme or isolated, although it shouldn’t, since it joins a list that includes Scarlett Johansson, Kristen Bell, and many other celebrities whose photos have suffered the same fate. In the fashion industry, however, the use of Artificial Intelligence has become a serious matter.

The theft of Nasia Matsa’s face has come to highlight the shift of companies and brands towards the use of non-existent models. Non-existent not because of their unreal proportions or extravagant beauty, but because they are made from bits and bytes. Already in 2023, Levi’s launched its first campaign featuring post-humans, models created on Lalaland.ai’s computers. This studio specializes in creating all kinds of models of any size, color, and characteristics desired by the client, which now favors “diversity” and “inclusiveness,” with prices starting from just 600 euros per month. Who knows whether, for the Naomi, Cindy, Bella, or Gigi of the future, we will praise not the genes of their parents’ union but the creativity and, above all, the computational power of the algorithm?

The use of Artificial Intelligence is already a concern in Hollywood. During the recent five-month screenwriters’ strike, a just struggle that actors supported, one of the main demands was the establishment of rules for the use of AI by film studios and on-demand platforms, which now have the upper hand.

Screenwriters are concerned that ChatGPT and similar machines will eventually replace or, at the very least, undermine their work, since it’s humanly impossible to meet the demands of the mass production of television series. Actors, for their part, tremble at the mere idea that digital doubles, just as photogenic as themselves, will soon become even more useful than they are. Why should studios pay exorbitant sums to actors when they can have their digital holograms, which, as is known, can be programmed not to behave like divas?

A first-rate, and undoubtedly self-referential, comment on the ethical but ultimately practical issue that Artificial Intelligence has raised in the entertainment industry came from Salma Hayek. Or rather, from the protagonist of the first episode of the sixth season of the series “Black Mirror.” In “Joan is Awful,” the Mexican actress and wife of French magnate François-Henri Pinault portrayed a hologram that re-enacted the life and daily routine of a woman named Joan, who had granted an on-demand television platform the right to receive and exploit all her personal data. Yes, she hadn’t read the terms and conditions.

The story had a happy ending as the hologram and the person joined forces to dismantle the supercomputer that orchestrated the transfer of real life to the small screen. Things are somewhat different in real life. After all, even in the case of Nasia Matsa, it wasn’t a machine that decided to steal her face and exploit it commercially without her consent. A person had the idea and another person pressed the button.