Humanity has a data storage problem: More data were created in the past 2 years than in all of preceding history. And that torrent of information may soon outstrip the ability of hard drives to capture it. Now, researchers report that they’ve come up with a new way to encode digital data in DNA to create the highest-density large-scale data storage scheme ever invented. Capable of storing 215 petabytes (215 million gigabytes) in a single gram of DNA, the system could, in principle, store every bit of data ever recorded by humans in a container about the size and weight of a couple of pickup trucks. But whether the technology takes off may depend on its cost.

DNA has many advantages for storing digital data. It’s ultracompact, and it can last hundreds of thousands of years if kept in a cool, dry place. And as long as human societies are reading and writing DNA, they will be able to decode it. “DNA won’t degrade over time like cassette tapes and CDs, and it won’t become obsolete,” says Yaniv Erlich, a computer scientist at Columbia University. And unlike other high-density approaches, such as manipulating individual atoms on a surface, new technologies can write and read large amounts of DNA at a time, allowing DNA storage to be scaled up.

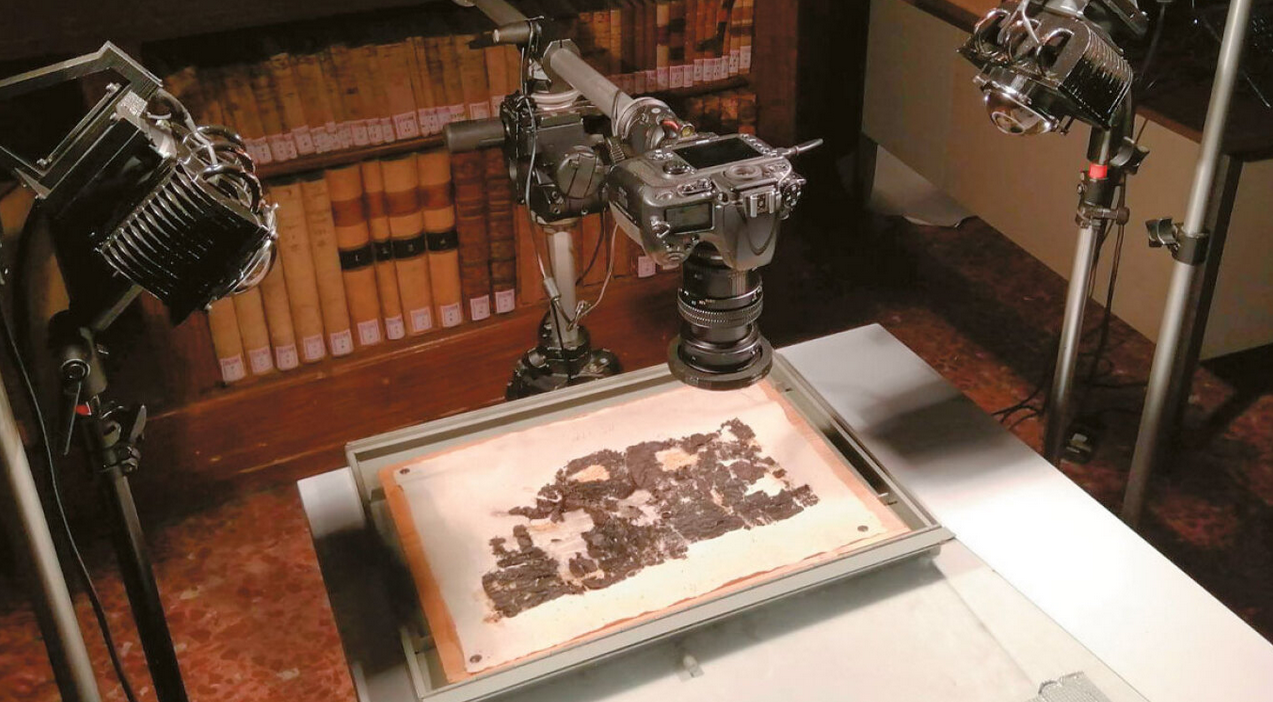

Scientists have been storing digital data in DNA since 2012. That was when Harvard University geneticists George Church, Sri Kosuri, and colleagues encoded a 52,000-word book in thousands of snippets of DNA, using strands of DNA’s four-letter alphabet of A, G, T, and C to encode the 0s and 1s of the digitized file. Their particular encoding scheme was relatively inefficient, however, and could store only 1.28 petabytes per gram of DNA. Other approaches have done better. But none has been able to store more than half of what researchers think DNA can actually handle, about 1.8 bits of data per nucleotide of DNA. (The number isn’t 2 bits because of rare, but inevitable, DNA writing and reading errors.)
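
To see where the 2-bit ceiling comes from: four possible bases can distinguish four values, which is exactly two binary digits per nucleotide. The short Python sketch below illustrates that idea with an arbitrary mapping (00 to A, 01 to C, 10 to G, 11 to T). The mapping and the function names are assumptions made for illustration; this is not the Harvard team’s encoding or the one Erlich and Zielinski used.

```python
# Illustrative mapping only (an assumption, not any published scheme):
# each nucleotide carries two bits, the error-free ceiling.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def bytes_to_dna(data: bytes) -> str:
    """Write each byte as 8 bits, then map every 2 bits to one base."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def dna_to_bytes(strand: str) -> bytes:
    """Reverse the mapping: one base back to 2 bits, 8 bits back to a byte."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = bytes_to_dna(b"Hi")
print(strand)                # CAGACGGC
print(dna_to_bytes(strand))  # b'Hi'
```

Two bytes (16 bits) become eight bases here. Real schemes land below this ideal, near the roughly 1.8 bits per nucleotide cited above, because some capacity goes to coping with writing and reading errors.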

Erlich thought he could get closer to that limit. So he and Dina Zielinski, an associate scientist at the New York Genome Center, looked at the algorithms that were being used to encode and decode the data. They started with six files, including a full computer operating system, a computer virus, an 1895 French film called Arrival of a Train at La Ciotat, and a 1948 study by information theorist Claude Shannon. They first converted the files into binary strings of 1s and 0s, compressed them into one master file, and then split the data into short strings of binary code. They devised an algorithm called a DNA fountain, which randomly packaged the strings into so-called droplets, to which they added extra tags to help reassemble them in the proper order later. In all, the researchers generated a digital list of 72,000 DNA strands, each 200 bases long.
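
The droplet idea can be sketched in a few lines of Python. This is a toy model of a generic fountain code, not the researchers’ implementation: the article does not say how strings are combined, so the XOR step, the 1-to-3 segment choice, the use of a random seed as the tag, and all function names are assumptions. The conversion of droplets into DNA bases is left out.

```python
import random


def split_segments(data: bytes, size: int) -> list:
    """Split data into equal-size segments, zero-padding the last one."""
    padded = data + b"\x00" * (-len(data) % size)
    return [padded[i:i + size] for i in range(0, len(padded), size)]


def pick_indices(seed: int, n_segments: int) -> list:
    """Re-derive from the seed which segments a droplet combines."""
    rng = random.Random(seed)
    degree = rng.randint(1, min(3, n_segments))  # toy choice (assumption)
    return rng.sample(range(n_segments), degree)


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def make_droplet(seed: int, segments: list) -> tuple:
    """Combine randomly chosen segments; the seed travels along as the tag."""
    payload = bytes(len(segments[0]))
    for i in pick_indices(seed, len(segments)):
        payload = xor_bytes(payload, segments[i])
    return seed, payload


def decode(droplets: list, n_segments: int):
    """Peeling decoder: solve droplets that have exactly one unknown segment."""
    known = {}
    pending = [(pick_indices(seed, n_segments), payload)
               for seed, payload in droplets]
    progress = True
    while progress and len(known) < n_segments:
        progress = False
        for indices, payload in pending:
            unknown = [i for i in indices if i not in known]
            if len(unknown) != 1:
                continue
            # Strip the already-known segments out of this droplet.
            for i in indices:
                if i in known:
                    payload = xor_bytes(payload, known[i])
            known[unknown[0]] = payload
            progress = True
    if len(known) < n_segments:
        return None  # not enough droplets yet
    return [known[i] for i in range(n_segments)]


message = b"DNA fountain sketch"
segments = split_segments(message, 4)

# Keep producing droplets until the decoder can rebuild every segment.
droplets, recovered = [], None
for seed in range(10_000):
    droplets.append(make_droplet(seed, segments))
    recovered = decode(droplets, len(segments))
    if recovered is not None:
        break

restored = b"".join(recovered).rstrip(b"\x00")
print(restored == message, "droplets used:", len(droplets))
```

Because each tag lets the decoder work out which segments went into a droplet, the droplets can arrive in any order, and losing a few only means reading a few more.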

More at: sciencemag.org